AI's next bottleneck isn't the model. It's your brain.

BCI. Probably the most unpredictable space in tech.

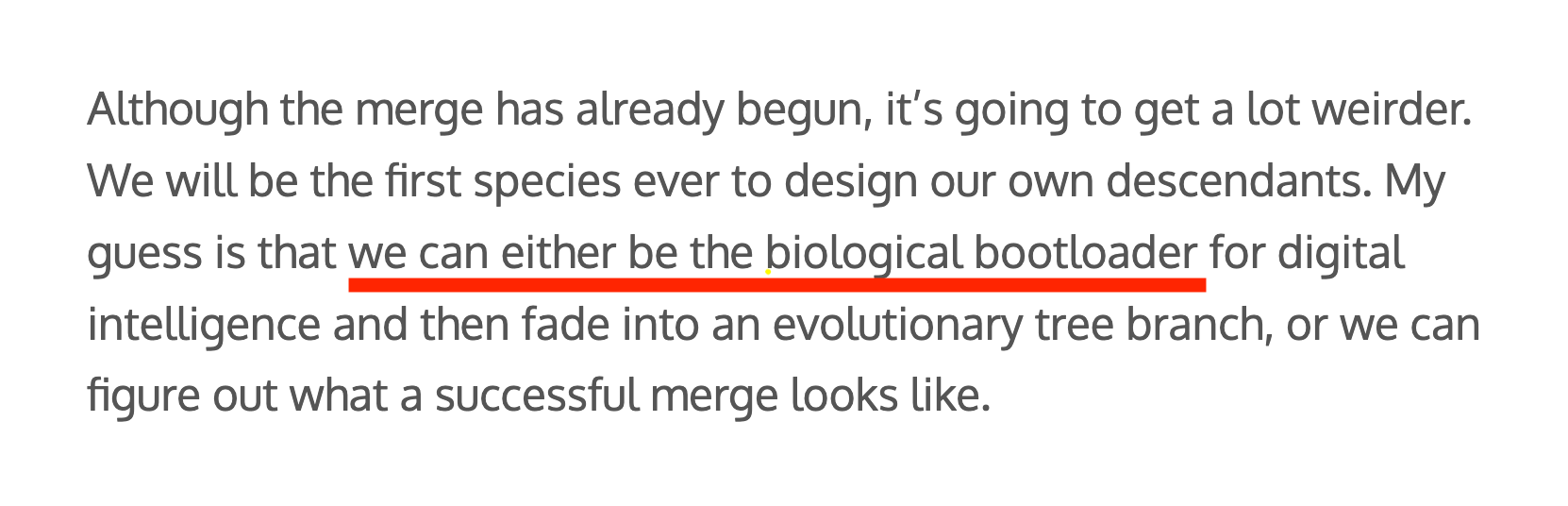

OpenAI is reportedly incubating a BCI (brain-computer interface) startup, Merge Labs, with Sam Altman himself as co-founder. If you've read his 2017 essay "The Merge," you saw this coming.

Back then, he argued humans would either:

- Be the "biological bootloader" for AI and fade into irrelevance, or

- Find a way to merge with it.

Increasingly, BCI looks like that merge.

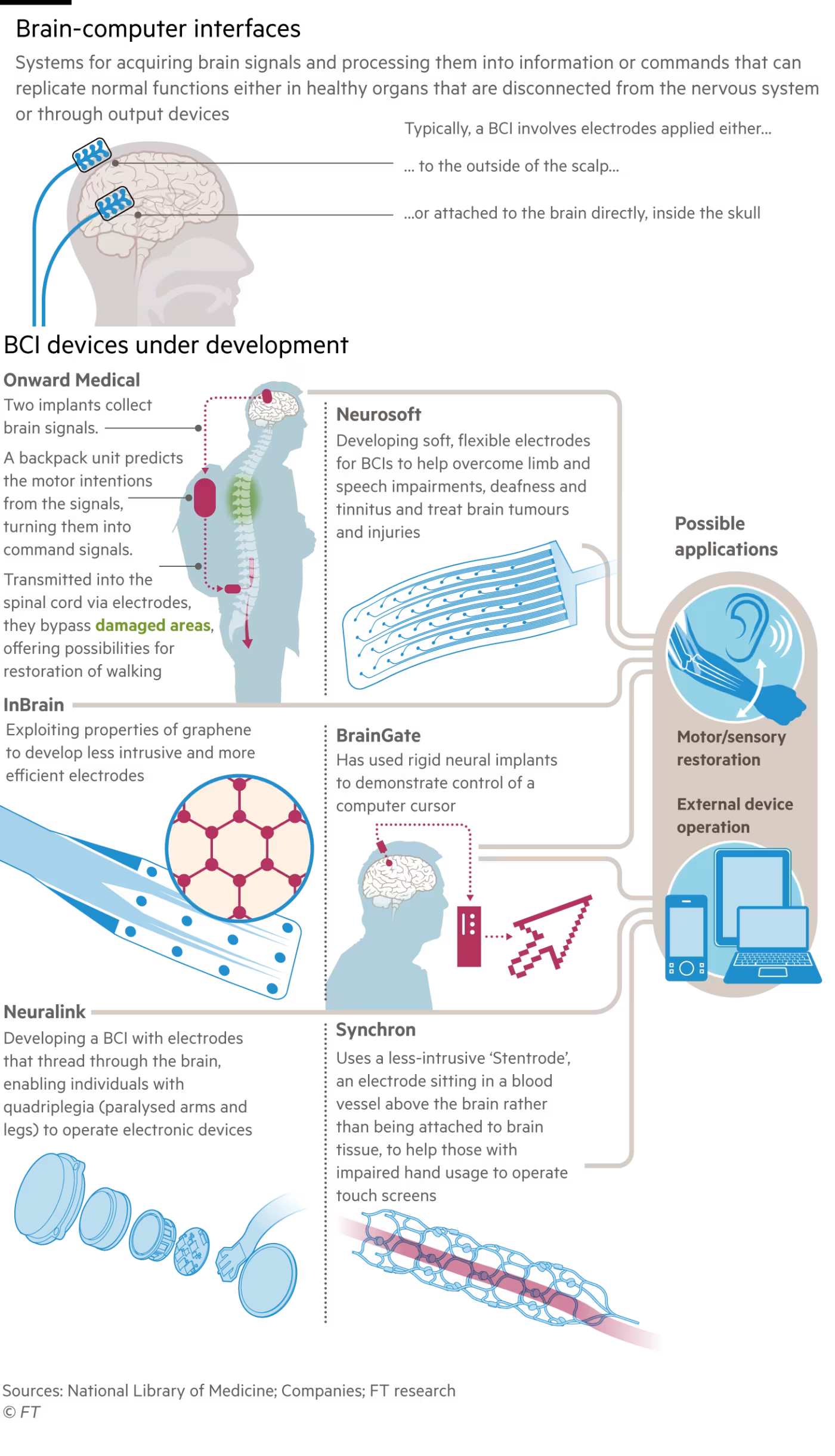

Not just for paralyzed patients moving cursors, but for healthy humans streaming thoughts, senses, and context straight into AI, bypassing the keyboard, mic, and every other I/O bottleneck in the stack.

Right now, we're obsessed with model performance. Fair enough. But soon the model's capability will outrun the bandwidth at which we can feed it.

The real ceiling won't be its IQ; it'll be the brain-to-machine pipe.

As AI demands richer context, that pipe must widen. You can't unlock full intelligence if you can only send it one prompt at a time.

That's why:

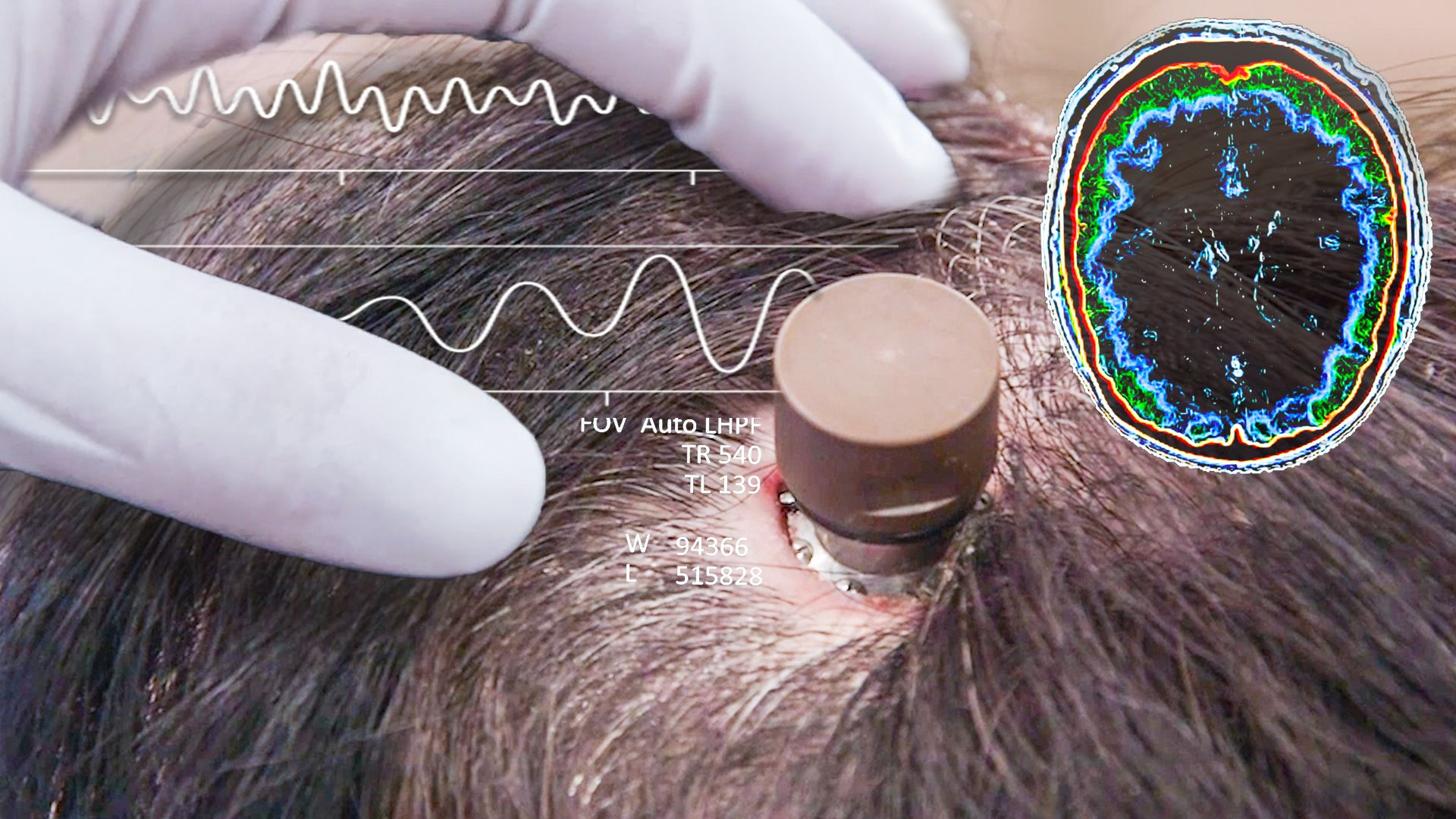

- Neuralink drills into skulls.

- Synchron, backed by Bezos and Gates, threads electrodes through blood vessels, with Apple on board.

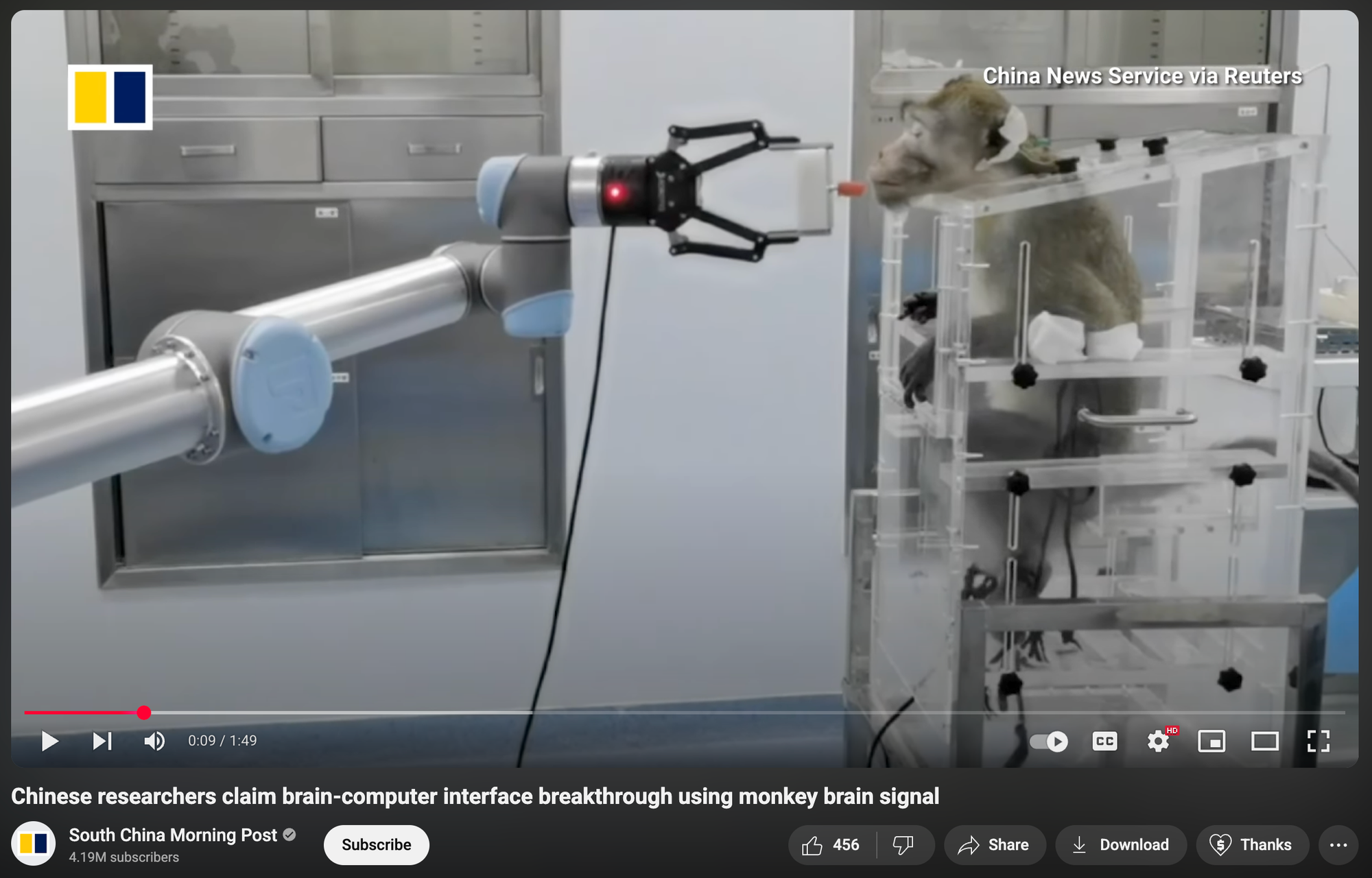

- China is speedrunning near-simultaneous(!) animal and human trials, and will likely be first to reach "real users."

Different methods. Same endgame.

If BCI clears the regulatory wall, the UI flips: we don't "use" AI; we co-process with it.

- Input = thought + gaze + ambient context.

- Output = action, not apps.

The human-to-data pipeline gets rewritten from scratch.

"Chips in brains" is, for now, a revolting image.

But if model performance gets capped by human input speed, and the choice is:

- Invasive implants with near-perfect performance, or

- "Good enough" wearables with anemic UX and ~20% of the capability...

...it gets harder to ignore option 1.

Have I just watched too much Black Mirror?