Sora won't stay slop machine forever

Vision will soon reason, just like language before it.

There's no denying it: OpenAI Sora's feed is pure dopamine sludge.

Most of it borderline unwatchable while frying your brain.

But the research underneath, thankfully, points the other way.

DeepMind's new paper on Veo 3 is signaling we may have reached the "hello world" moment of visual reasoning.

So what are Veo 3, Sora, and their ilk actually cooking under all that slop?

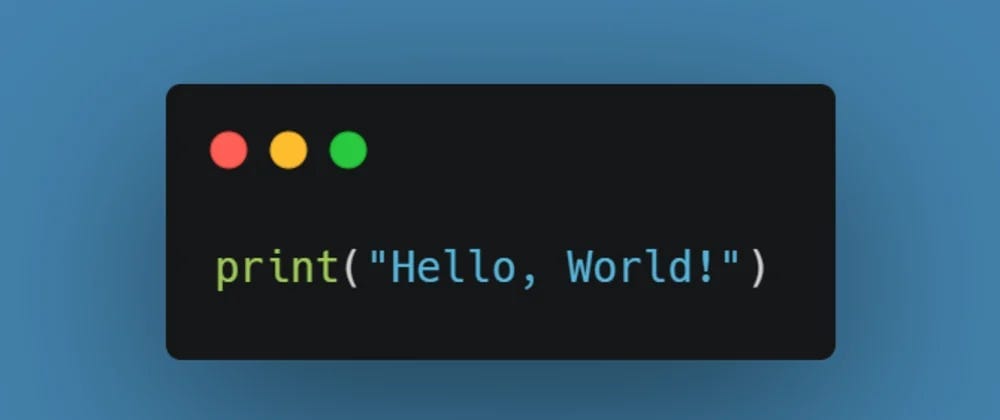

Veo 3 builds intelligence across four rough rungs. It:

- Perceives (edges, masks, denoising),

- Models (physics, materials, light),

- Manipulates (edits and transforms that hold up),

- Reasons (goal → sequence → outcome).

It doesn't just render pixels. It reads a scene, maintains a world model over time, applies targeted changes, and plays out a plan.

Given only a prompt and a still image, the model animates through tasks once reserved for bespoke CV stacks. No bespoke heads, no fine-tunes.

Just describe the task and let the frames do the work.

You can see the vision-native edge here. When you render a maze as text, a top-tier LLM can certainly hang. But flip that same maze into an image, Veo 3 cleanly wins.

Which checks out.

Planning lands better when you start in pixels, not tokens, and that's where most of our real-world work lives anyway.

In practice, it plans: perceive → update → check → continue. That simple loop recurs everywhere, from mazes and object extraction to rule following and image manipulation.

Veo 3 also improves materially with cheap retries, the same way LLMs jump from pass@1 to pass@k.

Operationally, that shows up at the prompt layer.

Prompts now leave fingerprints you can quantify. For instance, a green backdrop beats white on segmentation, likely a learned keying prior, and benchmarks back it up. Veo 3 posts strong mean Intersection over Union (mIoU) on instance segmentation and clears small puzzles that stalled earlier versions.

It echoes the early LLM era circa 2022, only this time for Visual Foundation Models (VFMs).

Once a generalist clears "good enough" across the long tail, the incentive shifts from wiring dozens of point tools to orchestrating one model with verifiers and a retry budget.

In the near term it'll still look like creator toys, but the durable value is universal visual operators embedded into real workflows, such as:

- product imagery that updates to spec,

- QA that evaluates motion not just pixels,

- UI automation written in plain English,

- robot demos distilled straight into action logic.

The moat won't just be a cute feed.

It will be the control stack around the model: task phrasing, memory, verifiers, and cheap sampling that turn today's Sora thirst trap into a system you can ship and hang a KPI on.

For now, Sora's invite frenzy is the biggest headline, with "invite" codes going for ~$500(!) on eBay.

But the line to watch is this:

- Once framewise reasoning hits stable accuracy at short clip lengths, standard resolutions, and sane costs, a single VFM begins subsuming much of CV, the same way LLMs displaced bespoke NLP.

Just as language had its turn under large models, vision's up next.

…

and then there's Gary Marcus.

He's probably not gonna love hearing this, but Marcus, if you're listening,

we're still in inning one.